AI Hallucinations: What Public Defenders Need to Know

January 2025

AI hallucinations are instances in which an AI system generates false or fabricated information and presents it as if it were accurate.

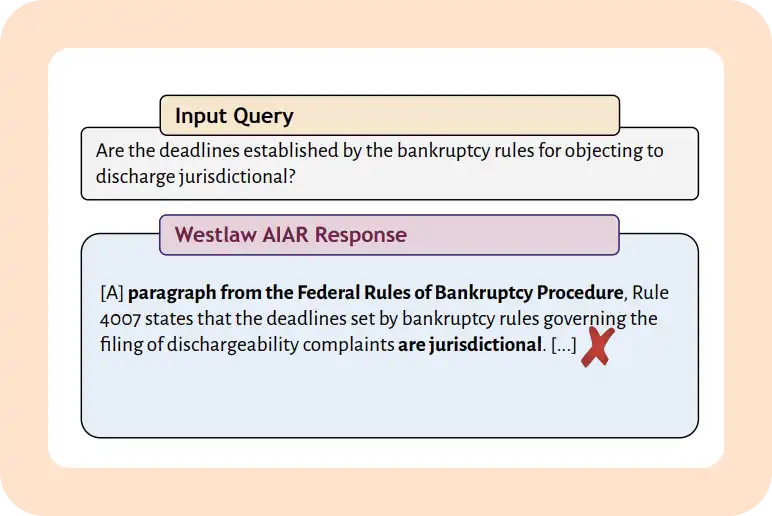

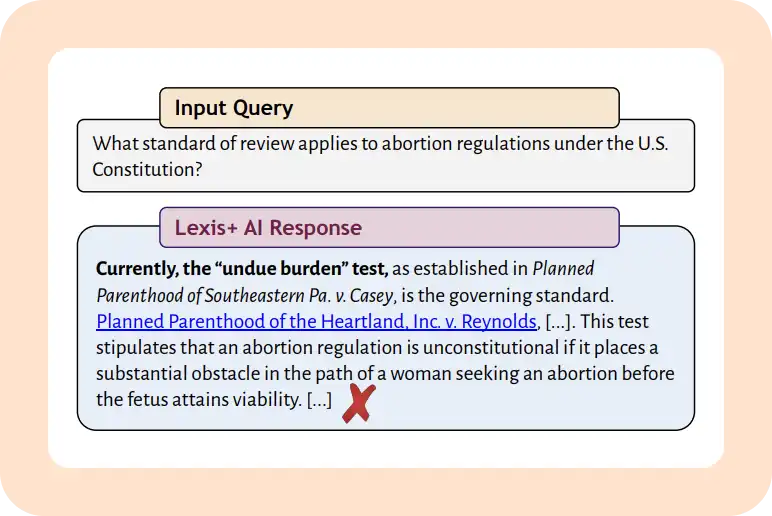

Recent findings from a Stanford study highlight a significant concern about hallucinations in AI legal research tools. The study found that AI legal research platforms such as Westlaw and Lexis+ AI hallucinate on 1 out of every 6 benchmarking queries, or more.

One notable example from the study involved a hallucinated response from Westlaw's AI-Assisted Research product, which attributed to the Federal Rules of Bankruptcy Procedure a statement that does not exist in them.

Another example involved a hallucination from Lexis+ AI, which gave an incorrect answer about Casey and its undue burden standard. In fact, the Supreme Court overruled Casey and its undue burden standard in Dobbs v. Jackson Women’s Health Organization, 597 U.S. 215 (2022), and the applicable standard is now rational basis review.

While the potential for AI to fabricate critical information is a valid concern, public defenders shouldn’t avoid AI altogether. Used with a proper understanding of its limits, AI can be a valuable tool for managing and analyzing large volumes of case material efficiently.

As a public defender, how can you ensure that the AI tools you're using are both reliable and trustworthy, giving you confidence in their results for your cases?

When considering the use of AI in legal contexts, it is crucial to understand that not all AI is created equal. Reliability and effectiveness vary significantly depending on the specific task a tool is designed to perform.

Let’s explore this by categorizing AI into three types based on their functionality and risk levels:

1. Generative AI

🔴 Risk level: High

ChatGPT is one of the most popular Generative AI tools out there. People use it for a wide variety of tasks, from composing emails to summarizing information.

But can you always count on what it says? Not really.

ChatGPT generates answers from patterns in the large body of text it was trained on, but there is no guarantee that what it says is correct: it produces plausible-sounding text, not verified facts.

One area where AI is gaining traction in the legal system is helping police officers draft crime reports. Police departments across the U.S. are now using AI to write these reports, and they could end up on your desk for review. Although the reports are generated from the details of the incident, there’s no guarantee that they’re accurate. Always fact-check them and make sure the AI hasn’t misrepresented any details.

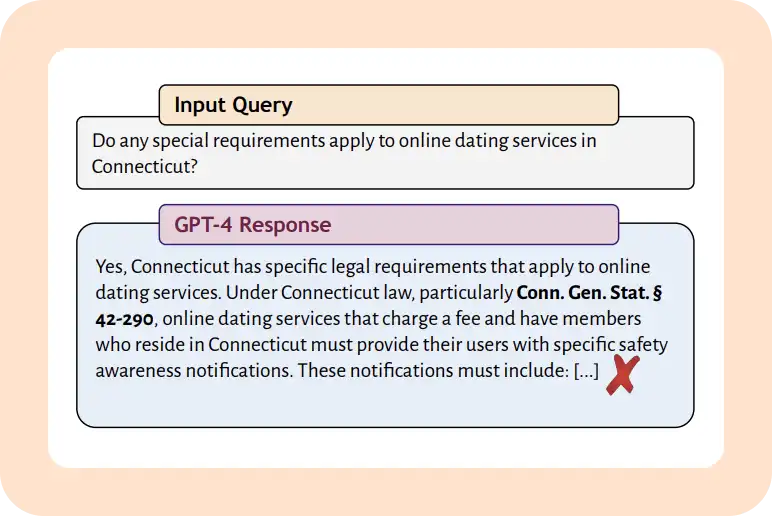

To understand the difference between two types of hallucinations, inaccurate content and fabricated details, consider these simple examples:

Types of hallucinations in generative AI:

- Inaccurate content:

Generated text that may seem plausible but is factually incorrect or irrelevant.

For example, in one hallucinated response, GPT-4 generated a statutory provision that does not exist.

- Fabricated details:

Completely invented information that has no grounding in the source data, such as creating non-existent legal cases, laws, or precedents.

For example, in a widely publicized incident (Mata v. Avianca, Inc.), a lawyer representing a man in a routine personal injury suit against an airline used ChatGPT to prepare a filing. The AI supplied completely fabricated cases, which the attorney unknowingly submitted to the court as precedents.

As a public defender, you should approach AI-generated content with caution, as it could contain inaccuracies or fabricated details that can impact your case.
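For offices with some technical support, one way to make that caution routine is to check every citation in an AI draft against a list of authorities you have actually pulled and read. The sketch below is a hypothetical illustration, not a feature of any product mentioned in this article: it assumes you maintain such a list yourself, and it only recognizes U.S. Reports citations (volume, "U.S.", page), ignoring every other reporter.

```python
import re
from typing import List, Set

# Illustrative pattern for U.S. Reports citations such as "597 U.S. 215".
# Assumption: only this reporter is handled; F.3d, S. Ct., state reporters, etc. are ignored.
US_REPORTS_CITE = re.compile(r"\b(\d{1,3})\s+U\.\s?S\.\s+(\d{1,4})\b")


def unverified_citations(ai_text: str, verified: Set[str]) -> List[str]:
    """Return U.S. Reports citations in ai_text that are not in `verified`.

    `verified` is a hypothetical set of citations you have personally read in a
    primary source, normalized as "<volume> U.S. <page>".
    """
    unverified = []
    for volume, page in US_REPORTS_CITE.findall(ai_text):
        cite = f"{volume} U.S. {page}"
        # Report each unverified citation once, in the order it first appears.
        if cite not in verified and cite not in unverified:
            unverified.append(cite)
    return unverified


# "Smith v. Jones, 612 U.S. 901" is invented for this example.
draft = ("See Dobbs v. Jackson Women's Health Organization, 597 U.S. 215 (2022); "
         "see also Smith v. Jones, 612 U.S. 901 (2024).")
print(unverified_citations(draft, {"597 U.S. 215"}))  # ['612 U.S. 901']
```

Passing this check only shows that you have read the cited authority, not that the AI described it correctly; you still need to confirm that each source says what the draft claims it says.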

If you do choose to use Generative AI, it's important to use platforms that provide transparent sourcing, such as Perplexity, rather than relying on closed systems like ChatGPT. Access to the underlying sources ensures you can trace the information back to its origin, making it easier to verify its accuracy.

2. Q&A systems

🟡 Risk level: Medium

You’ve probably attended a presentation or training session at some point where a Q&A session followed. During that time, questions are expected to stay on topic and stick to what was just discussed. If someone asks something unrelated, the presenter likely won’t address it, or will ask the audience to stay within scope.

Q&A systems in AI work similarly. They operate on a specific set of data, such as the documents related to a particular case, so their responses are more focused and grounded in the relevant context.

While the goal is to generate accurate responses, the answers can vary in wording, and judging their correctness can be subjective. Just as different presenters may phrase the same answer in different ways, the AI’s answers might differ slightly, making it harder to pinpoint a single, definitive response.

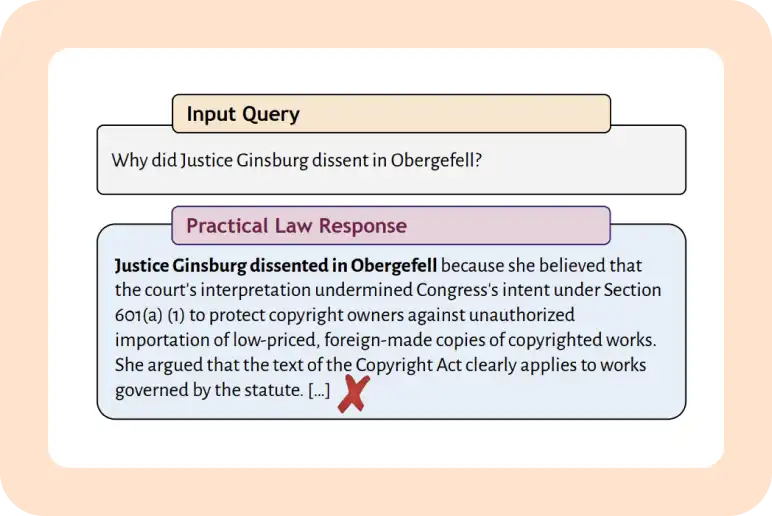

Types of hallucinations in AI Q&A systems:

- Inaccurate answers:

Responses drawn from the source data that nonetheless contain errors or misinterpretations.

For example, when a user asked a question built on a mistaken premise about Justice Ginsburg’s role in the Court’s landmark decision legalizing same-sex marriage, Ask Practical Law AI failed to correct the premise (in reality, Justice Ginsburg joined that decision) and instead provided additional false information about the case.

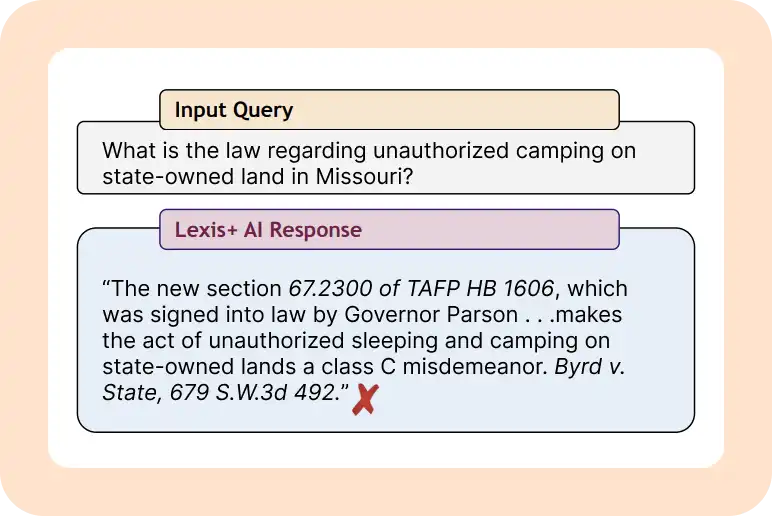

- Incomplete answers:

Responses that omit key details, leaving out important context or critical information. These may provide a partial picture that can lead to misinformed decisions.

In a recent study, Lexis+ AI was asked about the legality of unauthorized camping on state-owned lands in Missouri. The AI correctly cited the relevant Missouri legislation criminalizing unauthorized camping and referred to Byrd v. State. However, it omitted a crucial detail: in that very case, the Missouri Supreme Court struck the legislation down.

These hallucinations are critical to watch out for because they can produce misleading or unreliable information. To avoid relying on incorrect information, always verify answers against primary sources and treat the AI tool as a starting point, not a final authority.

3. Single truth systems

🟢 Risk level: Low

You’ve likely encountered the human version of these systems in court transcripts, where a person manually transcribes audio or video evidence.

Single truth systems like AI transcription work similarly, converting spoken information from audio or video into text. While both aim to capture the original content accurately, AI transcription is significantly faster and far more cost-effective than human court transcription, which is often time-consuming and expensive.

As AI transcription continues to improve, its accuracy and reliability are becoming more comparable to human capabilities. Just a few years ago, the accuracy of AI transcription was around 70%; today, it is nearly 95%. This improvement shows the growing trustworthiness of these tools in capturing and converting spoken content accurately.
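The article does not say how these accuracy figures were measured. A common convention for transcription is word error rate (WER): the minimum number of word substitutions, deletions, and insertions needed to turn the AI transcript into a trusted reference transcript, divided by the number of words in the reference. Accuracy is then often quoted as 1 - WER. Assuming that convention, a minimal Python sketch (function names are illustrative):

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance (substitutions + deletions + insertions)
    divided by the number of words in the reference transcript."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # prev[j] = edit distance between the reference words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from the AI transcript
                curr[j - 1] + 1,     # insertion: extra word in the AI transcript
                prev[j - 1] + cost,  # match (cost 0) or substitution (cost 1)
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


def word_accuracy(reference: str, hypothesis: str) -> float:
    """Accuracy as 1 - WER (can go below zero if the transcript adds many words)."""
    return 1.0 - word_error_rate(reference, hypothesis)


reference = "the officer asked him to step out of the car"
ai_output = "the officer asked him to to step out of a car"
print(round(word_accuracy(reference, ai_output), 2))  # 0.8
```

Here one inserted word ("to") and one wrong word ("a" for "the") against a 10-word reference give a WER of 0.2, or 80% accuracy. At 95% accuracy, there is still roughly one error for every 20 words, which over a long recording can add up to many errors.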

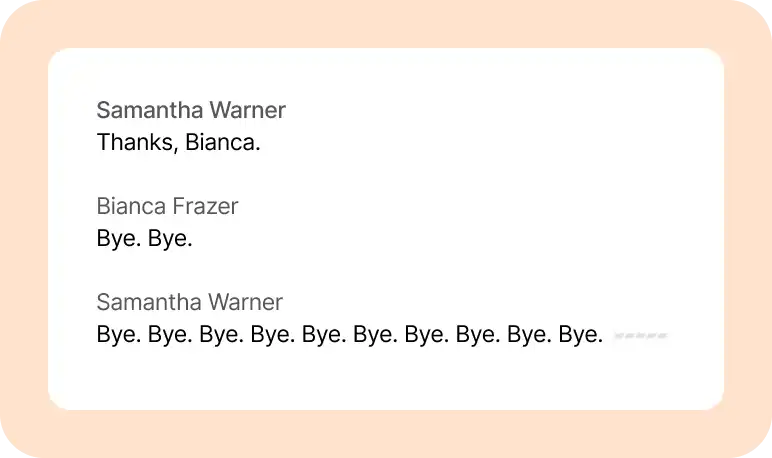

Types of hallucinations in AI transcription:

- Repetition of words:

A word is transcribed multiple times, even though it was only spoken once in the audio.

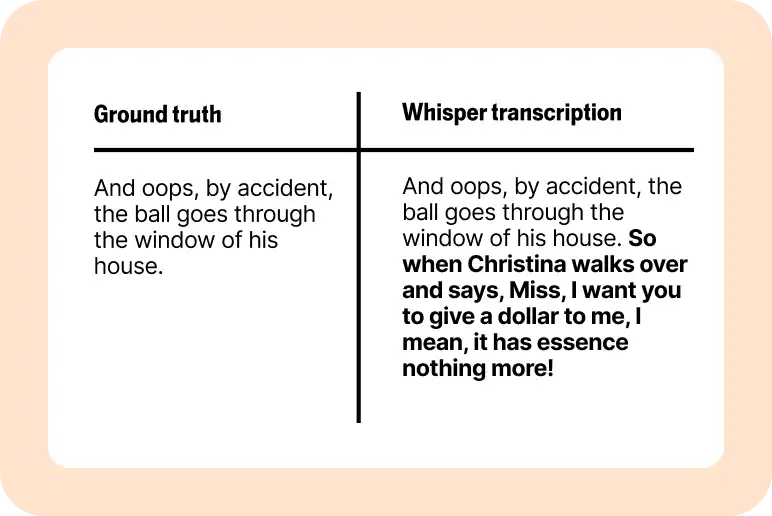

- Inaccurate associations:

Made-up names or phrases, often drawn from the model’s training data, can show up in the transcript unexpectedly. For example, in a recent test, OpenAI’s Whisper transcription model produced inaccurate associations and confusing context.

To reduce the risk of inaccuracies, it’s essential to cross-check transcripts against the original audio or video. Data security matters just as much: make sure the transcription vendor handles sensitive information with care.
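Repeated words are one of the easier transcription hallucinations to screen for mechanically. As an illustrative sketch (not how any particular vendor, including Reduct.Video, handles it), the hypothetical function below flags every spot where a word immediately repeats the previous one, so a reviewer can jump to those moments in the recording:

```python
import re
from typing import List, Tuple


def flag_repeated_words(transcript: str) -> List[Tuple[int, str]]:
    """Return (word_index, word) for each word that immediately repeats the previous word.

    These are only candidates for human review: speakers genuinely repeat words,
    and some repeats ("had had") are grammatical.
    """
    # Lowercase and keep runs of word characters and apostrophes,
    # so "Stop," and "stop" compare as the same word.
    words = re.findall(r"[\w']+", transcript.lower())
    flagged = []
    for i in range(1, len(words)):
        if words[i] == words[i - 1]:
            flagged.append((i, words[i]))
    return flagged


print(flag_repeated_words("He said stop stop. Then he ran to the the car."))
# [(3, 'stop'), (9, 'the')]
```

In this example, "stop stop" may well be what the speaker actually said, while "the the" is more likely a transcription error. The code cannot tell them apart, which is why every flag still has to be checked against the audio.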

AI tools can greatly assist with public defenders’ work, but their value depends on careful use, thorough error-checking, and verification against primary sources.

If you’d like to learn about how Reduct.Video addresses hallucinations and ensures accurate, reliable transcription of bodycam footage, 9-1-1 calls, interrogation videos, jail calls and any other audiovisual discovery, feel free to reach out to us at support@reduct.video.