Censoring Faces in Videos: Legal and Ethical Considerations

December 2025


Picture this scenario: You've just recorded a stunning video of your travels and are eager to share it on Instagram. Then someone approaches you, says they noticed you recording and asks you not to share the video on any platform.

This isn't limited to travel videos. The same situation can come up when you record documentaries, user interviews, meetings or videos for social media.

So what course of action would you take?

A quick and easy solution is censoring (also known as redacting) the faces and other personally identifiable information (PII) in the video. You can blur, cover or pixelate faces and PII, but make sure the effect is irreversible, so that nobody can recover the original image from the file you share.

Once that's done, you can post your content publicly with far fewer consent and privacy concerns.
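To make "irreversible" a little more concrete, here is a minimal sketch of two of the effects mentioned above, covering and pixelating. It is an illustration only: the names `cover`, `pixelate`, `Frame` and `Box` are hypothetical, and a frame is modeled as a small grid of grayscale values rather than a real video frame. A real workflow would read and write frames with a video library.

```python
from typing import List, Tuple

Frame = List[List[int]]          # grayscale pixels (0-255), indexed as frame[row][col]
Box = Tuple[int, int, int, int]  # (top, left, bottom, right); bottom/right are exclusive


def cover(frame: Frame, box: Box, fill: int = 0) -> Frame:
    """Return a copy of the frame with the box replaced by one solid value."""
    top, left, bottom, right = box
    out = [row[:] for row in frame]  # copy so the input frame is left untouched
    for r in range(top, bottom):
        for c in range(left, right):
            out[r][c] = fill
    return out


def pixelate(frame: Frame, box: Box, block: int = 4) -> Frame:
    """Return a copy of the frame with the box averaged in block x block tiles."""
    top, left, bottom, right = box
    out = [row[:] for row in frame]
    for r0 in range(top, bottom, block):
        for c0 in range(left, right, block):
            rows = range(r0, min(r0 + block, bottom))
            cols = range(c0, min(c0 + block, right))
            values = [frame[r][c] for r in rows for c in cols]
            mean = sum(values) // len(values)  # one value stands in for the whole tile
            for r in rows:
                for c in cols:
                    out[r][c] = mean
    return out


# A tiny 8x8 test frame with a different value in every pixel.
frame = [[r * 8 + c for c in range(8)] for r in range(8)]
covered = cover(frame, (2, 2, 6, 6))
pixelated = pixelate(frame, (0, 0, 4, 4), block=2)
```

A solid cover keeps nothing of the original pixels inside the box, while pixelation keeps one average per tile, so larger tiles leave less detail behind. Either way, the effect only protects anyone if it is written into the exported pixels rather than stored as a separate overlay that can be removed.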

In this article, we'll explore the what, why, and how of censoring faces in videos, while addressing legal and ethical implications.

What is censoring faces in videos?

Censoring faces in videos involves strategically obscuring or blurring the faces of individuals to protect their identities. This practice is often used when sharing videos publicly, especially when subjects haven't given consent for their faces to be shown.

Censoring faces serves as a tool to navigate the balance between sharing content and protecting personal information.

Why censor faces in videos: Legal and Ethical Considerations

There are many legal and ethical considerations to be aware of when releasing content publicly. Beyond consent, you also need to know whether any other legal restrictions apply.

Legal Considerations

- HIPAA (Health Insurance Portability and Accountability Act): HIPAA safeguards protected health information (PHI) in the United States. If your video is recorded in a healthcare setting, it's vital to censor identifiers such as patients' faces, medical records and patient numbers.

- GDPR (General Data Protection Regulation): For videos containing personal data of people in the European Union, GDPR requires you to protect their privacy rights. Censoring faces may be necessary to avoid violations and hefty fines.

- FOIA (Freedom of Information Act): In certain cases, videos held by US federal agencies can be subject to FOIA requests. To balance transparency with privacy, faces may need to be censored before those videos are released.

Ethical Considerations

- Consent and Release of Videos: It's crucial to obtain consent from individuals before recording and sharing videos that feature them. Even when censoring faces, respecting individuals' autonomy is paramount.

- Creativity and Integrity: Censoring faces, maintaining video quality and ensuring that the obscured areas don't accidentally reveal identities all require a creative touch. It's crucial to strike a balance between protection and clarity.

How can you censor faces using Reduct

To censor faces using Reduct, follow these simple steps:

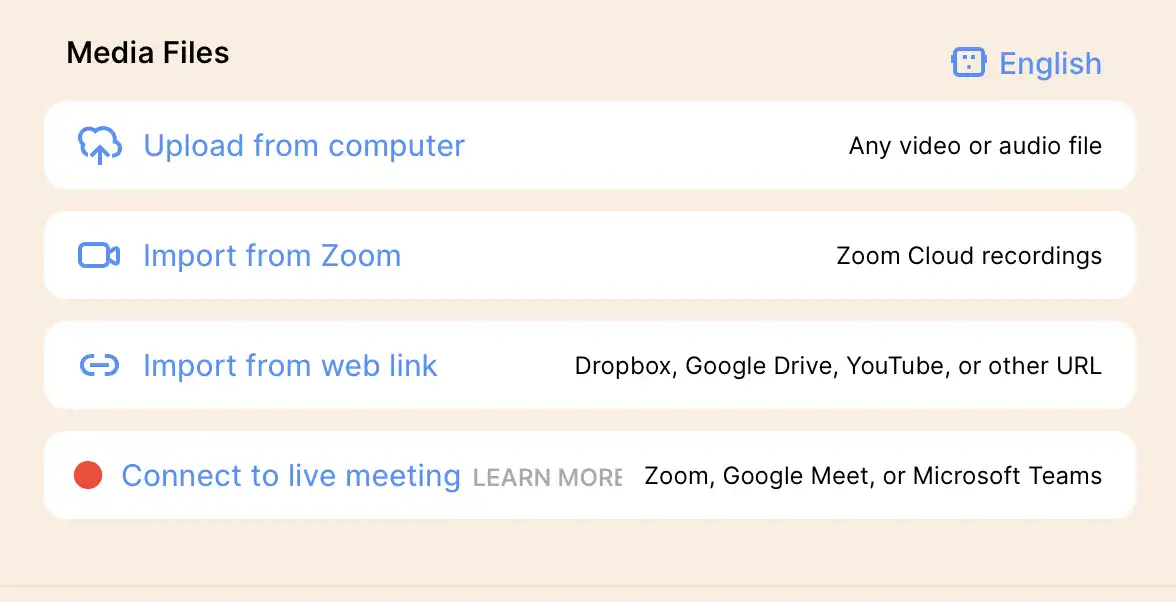

1. Upload Your Video

To get started, upload your video from your computer. You can also import videos from the web (Zoom, Google Drive, etc.) by pasting a public URL.

2. Identify the parts of the video to censor

Once you upload your video, you will get automatic transcripts. You can use the transcript to search through the video and navigate to the exact moments in the video that need censoring.

Once you find the relevant parts, you can simply select and highlight the transcript for redaction.
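If you are curious how transcript-based navigation can work in principle, the sketch below searches timestamped transcript segments for a keyword and returns the time ranges to review. This is not Reduct's API or data format: the `Segment` class, the `find_ranges` function and the `padding` parameter are all hypothetical names used for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Segment:
    start: float  # seconds from the beginning of the video
    end: float
    text: str


def find_ranges(segments: List[Segment], keyword: str,
                padding: float = 0.5) -> List[Tuple[float, float]]:
    """Return merged (start, end) ranges for segments that mention the keyword."""
    needle = keyword.lower()
    # Pad each hit slightly so the range does not cut off right at the word.
    hits = sorted(
        (max(0.0, s.start - padding), s.end + padding)
        for s in segments
        if needle in s.text.lower()
    )
    merged: List[Tuple[float, float]] = []
    for start, end in hits:
        if merged and start <= merged[-1][1]:
            # Overlaps the previous range, so extend it instead of adding a new one.
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


transcript = [
    Segment(0.0, 2.0, "Hi, I'm Alice."),
    Segment(2.0, 5.0, "Alice lives in Paris."),
    Segment(10.0, 12.0, "Thanks for joining."),
    Segment(20.0, 22.0, "Bye, Alice!"),
]
ranges = find_ranges(transcript, "alice")
```

Merging overlapping hits keeps the list of moments to review short. A keyword search only finds spoken mentions, so it is still worth watching the result for faces that appear without being named.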

3. Apply the blur effect

After you highlight the transcript, click the redact button and apply blur to the faces or PII. You can then adjust the position of the blur so that the faces or other specific areas are completely covered and confidentiality is maintained.

Once you are done, you can download the redacted video and share it publicly.
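The advice in step 3 about covering faces completely also applies if you script redaction yourself. One common precaution is to grow each face box by a safety margin and clamp it to the frame, so small head movements don't expose the edges of a face. The sketch below is a generic illustration, not how Reduct positions its blur. `pad_box` and its `margin` parameter are hypothetical names.

```python
from typing import Tuple

Box = Tuple[int, int, int, int]  # (top, left, bottom, right); bottom/right are exclusive


def pad_box(box: Box, frame_height: int, frame_width: int,
            margin: float = 0.2) -> Box:
    """Grow a box by a fraction of its size on every side, clamped to the frame."""
    top, left, bottom, right = box
    pad_y = round((bottom - top) * margin)
    pad_x = round((right - left) * margin)
    return (
        max(0, top - pad_y),
        max(0, left - pad_x),
        min(frame_height, bottom + pad_y),
        min(frame_width, right + pad_x),
    )


# A 100x100 face box in a 1280x720 frame, grown by 20% on each side.
padded = pad_box((100, 200, 200, 300), frame_height=720, frame_width=1280)
```

A larger margin hides more of the surrounding picture but lowers the chance that part of a face slips outside the blurred area.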

Balancing content sharing and personal privacy

By understanding legal and ethical considerations, obtaining proper consent, and employing tools like Reduct, you can strike the right balance between sharing content and safeguarding personal identities.